The Proactive Alert System for Emerging AI Threats is an advanced monitoring and response framework designed to identify, assess, and mitigate potential risks associated with the rapid development and deployment of artificial intelligence technologies. As AI systems become increasingly integrated into critical sectors such as finance, healthcare, and national security, the potential for misuse, unintended consequences, and vulnerabilities grows. This system leverages real-time data analytics, machine learning algorithms, and threat intelligence to provide early warnings and actionable insights to stakeholders. By anticipating and addressing AI-related threats before they materialize, the Proactive Alert System aims to safeguard technological advancements while ensuring ethical standards and public safety are upheld.

Understanding Proactive Alert Systems in AI Security

As artificial intelligence evolves, robust security measures have become essential. AI systems are now embedded in critical sectors such as healthcare, finance, and national security, and the threats they pose should not be underestimated. Proactive alert systems for emerging AI threats have therefore become a crucial component in safeguarding these technologies. They are designed to anticipate, identify, and mitigate risks before significant harm occurs, supporting the safe and ethical deployment of AI.

To understand the significance of proactive alert systems in AI security, it is essential to first recognize the nature of the threats they are designed to counteract. AI systems, by their very nature, are susceptible to a range of vulnerabilities. These include adversarial attacks, where malicious actors manipulate input data to deceive AI models, and data poisoning, which involves corrupting the training data to skew the model’s outcomes. Additionally, there are concerns about AI systems being used to perpetuate biases or infringe on privacy rights. Given these multifaceted threats, a reactive approach to AI security is insufficient. Instead, a proactive stance is necessary to preemptively address potential vulnerabilities.
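As a rough illustration of screening for one form of data poisoning, the sketch below compares the label mix of an incoming training batch against a trusted reference set using total variation distance. The function name `label_shift`, the sample labels, and the 0.2 alert threshold are illustrative assumptions. A check like this would only catch label-flipping that visibly changes class proportions, not subtler poisoning.

```python
from collections import Counter


def label_shift(reference_labels, batch_labels):
    """Total variation distance between two label distributions.

    Returns 0.0 when the class proportions match and 1.0 when the two
    sets share no labels at all. Both inputs must be non-empty.
    """
    ref = Counter(reference_labels)
    new = Counter(batch_labels)
    ref_total = sum(ref.values())
    new_total = sum(new.values())
    labels = set(ref) | set(new)
    return 0.5 * sum(
        abs(ref[label] / ref_total - new[label] / new_total) for label in labels
    )


# Hypothetical data: a trusted reference set and a suspicious new batch.
reference = ["benign"] * 90 + ["malicious"] * 10
incoming = ["benign"] * 60 + ["malicious"] * 40

ALERT_THRESHOLD = 0.2  # assumed value; tune against historical batches
shift = label_shift(reference, incoming)
if shift > ALERT_THRESHOLD:
    print(f"label shift {shift:.2f} exceeds threshold, hold batch for review")
```

Holding a batch for human review, rather than rejecting it automatically, keeps a legitimate change in the data from being silently discarded.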

Proactive alert systems operate by continuously monitoring AI systems for unusual activity or deviations from expected behavior, typically by applying statistical and machine learning techniques to a model’s inputs, outputs, and performance metrics. For instance, if the distribution of a model’s outputs departs significantly from the baseline recorded during validation, this could signal an adversarial attack, a shift in the input data, or a malfunction. By identifying such anomalies early, proactive alert systems enable organizations to investigate and take corrective action before the threat escalates.
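A minimal sketch of this kind of monitoring, assuming the model emits a numeric score per prediction: it measures how far the mean of a recent window sits from a baseline mean, in units of standard error. The names `drift_score` and `should_alert`, the sample scores, and the threshold of 3.0 are assumptions chosen for illustration, not part of any particular product.

```python
from statistics import mean, stdev


def drift_score(baseline, recent):
    """Distance of the recent mean from the baseline mean, in standard errors."""
    mu = mean(baseline)
    sigma = stdev(baseline)  # needs at least two baseline values
    if sigma == 0:
        return 0.0 if mean(recent) == mu else float("inf")
    standard_error = sigma / len(recent) ** 0.5
    return abs(mean(recent) - mu) / standard_error


def should_alert(baseline, recent, threshold=3.0):
    """True when the recent window has drifted beyond the assumed threshold."""
    return drift_score(baseline, recent) > threshold


# Hypothetical confidence scores recorded during validation and in production.
baseline_scores = [0.48, 0.52, 0.50, 0.47, 0.53, 0.49, 0.51, 0.50]
stable_scores = [0.49, 0.51, 0.50, 0.52]
shifted_scores = [0.71, 0.69, 0.74, 0.70]

print(should_alert(baseline_scores, stable_scores))   # False
print(should_alert(baseline_scores, shifted_scores))  # True
```

A drift alert of this kind says only that behavior changed, not why. Deciding between an attack, a data shift, and a malfunction still requires investigation.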

Moreover, the integration of proactive alert systems into AI security frameworks offers several advantages. Firstly, these systems enhance the resilience of AI technologies by providing an additional layer of defense against unforeseen threats. This is particularly important in high-stakes environments where the consequences of an AI failure could be catastrophic. Secondly, proactive alert systems contribute to the transparency and accountability of AI systems. By continuously monitoring and reporting on system performance, these systems help ensure that AI technologies are operating as intended and in compliance with ethical standards.

Furthermore, the implementation of proactive alert systems necessitates a collaborative approach involving multiple stakeholders. Developers, policymakers, and security experts must work together to establish guidelines and best practices for the deployment of these systems. This collaboration is essential to ensure that proactive alert systems are not only effective but also aligned with broader societal values and legal frameworks. Additionally, ongoing research and development are crucial to keep pace with the rapidly advancing field of AI and the evolving nature of threats.

In conclusion, as AI continues to permeate various aspects of society, the importance of proactive alert systems in AI security cannot be overstated. These systems play a vital role in identifying and mitigating potential threats before they can cause harm, thereby ensuring the safe and ethical use of AI technologies. By adopting a proactive approach to AI security, organizations can better protect themselves against the myriad of risks associated with these powerful tools. As the field of AI continues to advance, the development and implementation of proactive alert systems will remain a critical component of a comprehensive AI security strategy.

Key Features of Effective AI Threat Detection

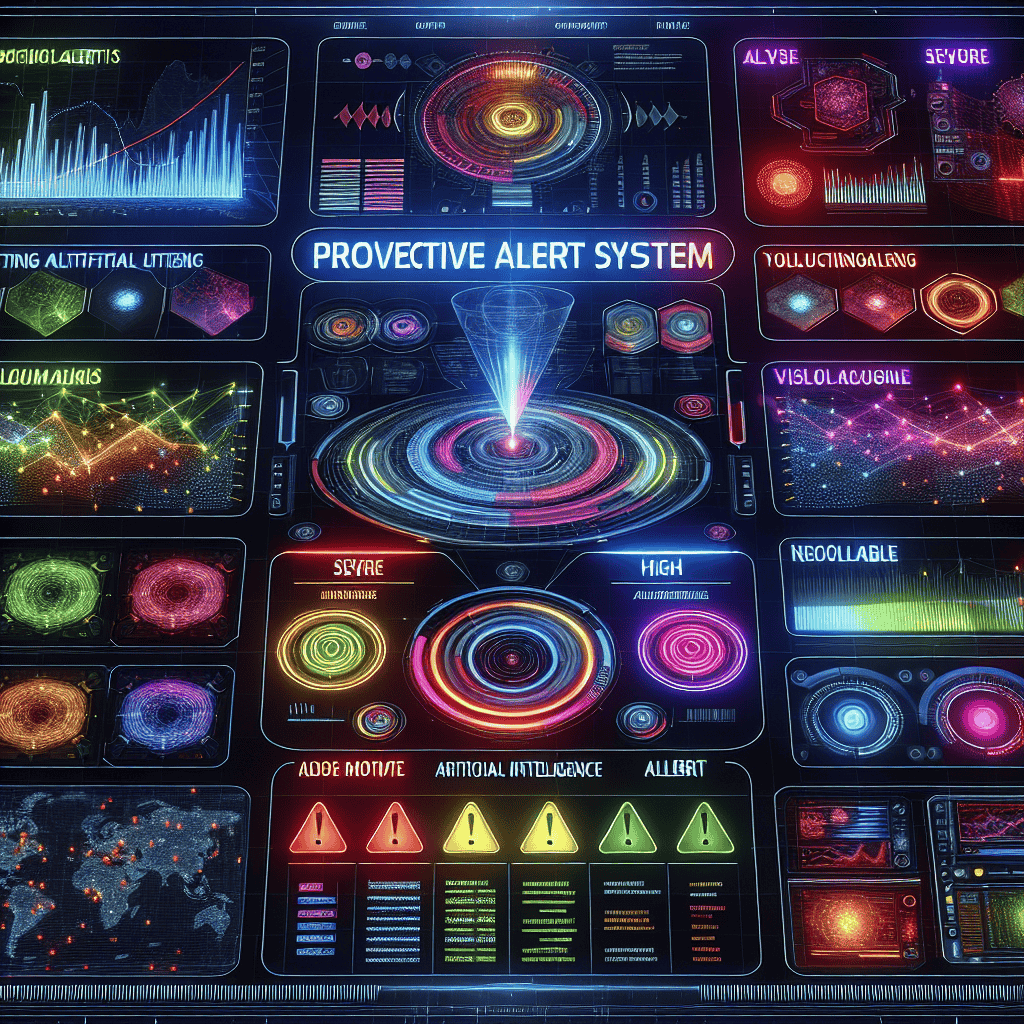

In the rapidly evolving landscape of artificial intelligence, the development of a proactive alert system for emerging AI threats has become a critical necessity. As AI technologies continue to advance, so too do the potential risks associated with their misuse or malfunction. Consequently, the implementation of effective AI threat detection systems is paramount to ensuring the safe and ethical deployment of these technologies. A key feature of such systems is their ability to anticipate and identify potential threats before they manifest into significant issues. This proactive approach is essential in mitigating risks and safeguarding both users and systems from potential harm.

To achieve this, an effective AI threat detection system must incorporate advanced machine learning algorithms capable of analyzing vast amounts of data in real-time. These algorithms should be designed to recognize patterns and anomalies that may indicate a potential threat. By continuously monitoring AI systems and their interactions with the environment, these algorithms can provide early warnings of possible vulnerabilities or malicious activities. Moreover, the integration of natural language processing capabilities can enhance the system’s ability to understand and interpret human language, which is crucial for identifying threats that may arise from social engineering or other forms of manipulation.

In addition to real-time data analysis, an effective AI threat detection system should also include a robust framework for risk assessment and prioritization. This involves evaluating the potential impact and likelihood of identified threats, allowing organizations to allocate resources and respond appropriately. By prioritizing threats based on their severity and potential consequences, organizations can focus their efforts on addressing the most critical issues first, thereby minimizing potential damage.
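One common way to express this prioritization is a likelihood-times-impact score. The sketch below assumes a likelihood between 0 and 1 and an impact rating from 1 to 5. The `Threat` class, the scales, and the sample entries are hypothetical, and real programs often use richer scoring schemes.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Threat:
    name: str
    likelihood: float  # assumed scale: 0.0 (never) to 1.0 (certain)
    impact: int        # assumed scale: 1 (minor) to 5 (catastrophic)

    @property
    def risk(self) -> float:
        """Simple expected-severity score."""
        return self.likelihood * self.impact


def prioritize(threats):
    """Return threats ordered from highest to lowest risk score."""
    return sorted(threats, key=lambda threat: threat.risk, reverse=True)


# Hypothetical register of identified threats.
threats = [
    Threat("data poisoning", likelihood=0.2, impact=5),
    Threat("adversarial input", likelihood=0.6, impact=3),
    Threat("unauthorized access", likelihood=0.1, impact=4),
]

for threat in prioritize(threats):
    print(f"{threat.name}: {threat.risk:.2f}")
```

In this example the frequent, moderate-impact threat ranks above the rare, severe one. Whether that ordering is acceptable is a policy decision, and some teams add a rule that anything with maximum impact is always reviewed first.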

Furthermore, collaboration and information sharing among stakeholders are vital components of an effective AI threat detection system. By fostering a culture of transparency and cooperation, organizations can benefit from shared insights and experiences, leading to more comprehensive threat detection and response strategies. This collaborative approach not only enhances the overall effectiveness of the system but also helps to build trust among stakeholders, which is essential for the successful implementation of AI technologies.

Another important feature of an effective AI threat detection system is its adaptability and scalability. As AI technologies continue to evolve, so too must the systems designed to protect them. This requires a flexible architecture that can accommodate new algorithms, data sources, and threat scenarios as they emerge. By ensuring that the system can adapt to changing conditions and scale to meet the demands of increasingly complex AI environments, organizations can maintain a robust defense against potential threats.

Moreover, the integration of ethical considerations into the design and implementation of AI threat detection systems is crucial. This involves ensuring that the system operates in a manner that respects user privacy and adheres to established ethical guidelines. By incorporating ethical principles into the system’s framework, organizations can ensure that their AI threat detection efforts align with broader societal values and expectations.

In conclusion, the development of a proactive alert system for emerging AI threats is a multifaceted endeavor that requires a combination of advanced technologies, collaborative efforts, and ethical considerations. By focusing on key features such as real-time data analysis, risk assessment, collaboration, adaptability, and ethical integration, organizations can create effective AI threat detection systems that safeguard against potential risks while promoting the responsible use of AI technologies. As the field of artificial intelligence continues to advance, the importance of these systems will only grow, underscoring the need for ongoing innovation and vigilance in the face of emerging threats.

Implementing Proactive Measures Against AI Threats

In the rapidly evolving landscape of artificial intelligence, the implementation of proactive measures against emerging AI threats has become a critical priority for organizations and governments worldwide. As AI technologies continue to advance, they bring with them a host of potential risks that, if left unchecked, could have significant implications for security, privacy, and societal well-being. Therefore, establishing a proactive alert system for emerging AI threats is essential to mitigate these risks effectively.

To begin with, the development of a proactive alert system necessitates a comprehensive understanding of the potential threats posed by AI. These threats can range from the misuse of AI in cyberattacks to the ethical concerns surrounding autonomous decision-making systems. By identifying and categorizing these threats, stakeholders can prioritize their efforts and allocate resources more efficiently. Moreover, this understanding serves as the foundation for designing an alert system that can detect and respond to threats in real-time.

Transitioning from understanding to implementation, the integration of advanced monitoring tools is crucial. These tools should be capable of continuously analyzing vast amounts of data to identify patterns indicative of potential threats. Machine learning algorithms, for instance, can be employed to detect anomalies in AI behavior that may signal malicious intent or system malfunctions. By leveraging these technologies, organizations can enhance their ability to anticipate and respond to threats before they escalate into full-blown crises.
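To make the idea of integrated monitoring tools concrete, here is a small sketch of how detectors, alerts, and a notification hook might fit together. Everything in it is hypothetical: the `Alert` fields, the two detector functions, the metric names, and the cut-off values are assumptions for illustration rather than a reference design.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional


@dataclass
class Alert:
    detector: str
    severity: str
    explanation: str  # human-readable reason, kept for auditability


Detector = Callable[[Dict[str, float]], Optional[Alert]]


def confidence_drop(metrics):
    """Flag a fall in average model confidence (0.60 is an assumed floor)."""
    value = metrics.get("mean_confidence", 1.0)
    if value < 0.60:
        return Alert("confidence_drop", "high",
                     f"mean confidence {value:.2f} is below 0.60")
    return None


def error_spike(metrics):
    """Flag an error rate above an assumed ceiling of 5%."""
    value = metrics.get("error_rate", 0.0)
    if value > 0.05:
        return Alert("error_spike", "medium",
                     f"error rate {value:.2%} is above 5%")
    return None


class AlertPipeline:
    """Runs each detector on a metrics snapshot and forwards any alerts."""

    def __init__(self, detectors: List[Detector], notify: Callable[[Alert], None]):
        self.detectors = detectors
        self.notify = notify

    def process(self, metrics):
        alerts = []
        for detector in self.detectors:
            alert = detector(metrics)
            if alert is not None:
                self.notify(alert)
                alerts.append(alert)
        return alerts


inbox = []  # stands in for email, chat, or a ticketing system
pipeline = AlertPipeline([confidence_drop, error_spike], notify=inbox.append)
pipeline.process({"mean_confidence": 0.42, "error_rate": 0.01})
for alert in inbox:
    print(alert.severity, alert.explanation)
```

Keeping detectors as plain functions means new checks can be added without touching the pipeline, and the `explanation` field gives responders a stated reason for each alert.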

In addition to technological solutions, fostering collaboration among stakeholders is vital for the success of a proactive alert system. Governments, private sector entities, and academic institutions must work together to share information and best practices. This collaboration can lead to the development of standardized protocols and frameworks that ensure a coordinated response to AI threats. Furthermore, by pooling resources and expertise, stakeholders can enhance their collective ability to address the complex challenges posed by AI.

Another critical aspect of implementing proactive measures is the establishment of regulatory frameworks that govern the use and development of AI technologies. These frameworks should be designed to promote transparency and accountability, ensuring that AI systems are developed and deployed in a manner that prioritizes safety and ethical considerations. By setting clear guidelines and standards, regulators can help prevent the misuse of AI and provide a basis for enforcement actions when necessary.

Moreover, public awareness and education play a significant role in the proactive management of AI threats. By informing the public about the potential risks associated with AI and the measures being taken to address them, stakeholders can build trust and foster a culture of vigilance. Educational initiatives can also empower individuals to recognize and report potential threats, thereby enhancing the overall effectiveness of the alert system.

In conclusion, the implementation of a proactive alert system for emerging AI threats is a multifaceted endeavor that requires a combination of technological innovation, collaboration, regulatory oversight, and public engagement. By taking a comprehensive approach to threat management, stakeholders can not only mitigate the risks associated with AI but also harness its potential for positive impact. As AI continues to shape the future, proactive measures will be indispensable in ensuring that its development aligns with the broader goals of security, privacy, and societal well-being.

The Role of Machine Learning in Proactive Alert Systems

In the rapidly evolving landscape of artificial intelligence, the development of proactive alert systems has become increasingly crucial to mitigate emerging threats. At the heart of these systems lies machine learning, a subset of AI that empowers computers to learn from data and make decisions with minimal human intervention. Machine learning plays a pivotal role in enhancing the capabilities of proactive alert systems, enabling them to identify potential threats before they manifest into significant issues. By leveraging vast amounts of data, machine learning algorithms can detect patterns and anomalies that may indicate the presence of a threat, thus providing an early warning system that is both efficient and effective.

One of the primary advantages of incorporating machine learning into proactive alert systems is its ability to process and analyze datasets far larger than human analysts could review manually. This capability is essential given the volume of data that modern systems generate, which continues to grow rapidly. Machine learning algorithms can sift through this data, identifying subtle patterns that may not be immediately apparent to human analysts. Consequently, these systems can provide timely alerts, allowing organizations to take preemptive measures to address potential threats.

Moreover, machine learning enhances the adaptability of proactive alert systems. Traditional systems often rely on predefined rules and thresholds, which can become obsolete as new threats emerge. Machine learning models, in contrast, can be retrained or updated as new data arrives, allowing them to track a changing threat landscape. This adaptability helps proactive alert systems remain relevant as the nature of threats changes over time. Kept current in this way, these systems can produce more accurate alerts, with fewer false positives and false negatives, although the gain depends on the quality of the data they learn from.
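The contrast between a fixed rule and an adaptive baseline can be sketched with a simple rolling-window detector. This is a statistical stand-in rather than a trained model, and the class name `RollingDetector`, the window size, the multiplier `k`, and the fixed limit of 120 are all assumptions made for the example.

```python
from collections import deque
from statistics import mean, pstdev


class RollingDetector:
    """Flags values far from the mean of the most recent normal readings."""

    def __init__(self, window=5, k=3.0, min_spread=1.0):
        self.history = deque(maxlen=window)
        self.k = k
        self.min_spread = min_spread  # floor so a flat window is not oversensitive

    def observe(self, value):
        # Warm-up: collect a full window before judging anything.
        if len(self.history) < self.history.maxlen:
            self.history.append(value)
            return False
        center = mean(self.history)
        spread = max(pstdev(self.history), self.min_spread)
        is_anomaly = abs(value - center) > self.k * spread
        if not is_anomaly:
            # Learn only from readings judged normal.
            self.history.append(value)
        return is_anomaly


# A sudden spike is flagged.
detector = RollingDetector()
readings = [100, 102, 98, 101, 99, 100, 160, 103, 101]
print([v for v in readings if detector.observe(v)])  # [160]

# A gradual, legitimate rise: the fixed rule fires repeatedly, the baseline adapts.
rising = list(range(100, 141, 2))
FIXED_LIMIT = 120  # assumed static threshold
adaptive = RollingDetector()
print(sum(v > FIXED_LIMIT for v in rising))          # 10
print(sum(adaptive.observe(v) for v in rising))      # 0
```

The same adaptability has a cost: a baseline that follows gradual change can also be nudged slowly by an attacker, so adaptive detectors are often paired with a few hard limits.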

In addition to adaptability, machine learning also contributes to the precision of proactive alert systems. By employing advanced techniques such as deep learning and neural networks, these systems can achieve a higher level of accuracy in threat detection. These techniques enable the systems to understand complex data structures and relationships, allowing them to distinguish between benign anomalies and genuine threats. As a result, organizations can prioritize their responses, focusing their resources on addressing the most pressing issues.

Furthermore, machine learning facilitates the integration of diverse data sources into proactive alert systems. In today’s interconnected world, threats can arise from various domains, including cybersecurity, finance, and public health. Machine learning algorithms can aggregate and analyze data from multiple sources, providing a comprehensive view of potential threats. This holistic approach enables organizations to identify correlations and interdependencies that may not be evident when examining data in isolation. Consequently, proactive alert systems can offer more informed and strategic insights, empowering organizations to make better decisions.
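A simple, non-learning version of this cross-source view is to escalate only when independent sources report the same entity within a short time span. The sketch below assumes events arrive as `(timestamp_seconds, source, entity)` tuples. The function name `correlate`, the five-minute window, and the sample feed names are hypothetical.

```python
from collections import defaultdict


def correlate(events, window_seconds=300, min_sources=2):
    """Return entities reported by at least `min_sources` distinct sources
    within `window_seconds` of one another, mapped to those sources."""
    by_entity = defaultdict(list)
    for timestamp, source, entity in events:
        by_entity[entity].append((timestamp, source))

    escalated = {}
    for entity, reports in by_entity.items():
        reports.sort()
        for index, (start, _) in enumerate(reports):
            # Sources seen in the window that opens at this report.
            sources = {
                source
                for timestamp, source in reports[index:]
                if timestamp - start <= window_seconds
            }
            if len(sources) >= min_sources:
                escalated[entity] = sorted(sources)
                break
    return escalated


# Hypothetical events from three separate monitoring feeds.
events = [
    (0, "network", "model-api"),
    (120, "fraud", "model-api"),
    (200, "network", "batch-job"),
    (4000, "health", "batch-job"),
]
print(correlate(events))  # {'model-api': ['fraud', 'network']}
```

Here `batch-job` is not escalated because its two reports are more than an hour apart, which is the kind of relationship that is easy to miss when each feed is reviewed in isolation.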

Despite the numerous benefits, the implementation of machine learning in proactive alert systems is not without challenges. Issues such as data privacy, algorithmic bias, and the need for high-quality data can impact the effectiveness of these systems. Therefore, it is essential for organizations to address these challenges by adopting best practices in data management and algorithm development. By doing so, they can harness the full potential of machine learning to enhance their proactive alert systems.

In conclusion, machine learning is a critical component in the development of proactive alert systems for emerging AI threats. Its ability to process large datasets, adapt to new threats, and integrate diverse data sources makes it an invaluable tool in the fight against potential risks. As organizations continue to navigate the complexities of the digital age, the role of machine learning in proactive alert systems will undoubtedly become even more significant, providing a robust defense against the ever-evolving threat landscape.

Challenges in Developing Proactive AI Threat Alerts

The development of a proactive alert system for emerging AI threats presents a multitude of challenges that require careful consideration and innovative solutions. As artificial intelligence continues to evolve at an unprecedented pace, the potential for misuse and unintended consequences grows in parallel. Consequently, the need for a robust system that can anticipate and mitigate these threats before they materialize is more pressing than ever. However, the path to creating such a system is fraught with complexities.

One of the primary challenges in developing a proactive alert system is the inherent unpredictability of AI advancements. The rapid pace of innovation in AI technologies means that new threats can emerge unexpectedly, often outpacing the ability of existing systems to detect and respond to them. This unpredictability necessitates a system that is not only reactive but also capable of anticipating potential threats based on emerging trends and patterns. To achieve this, developers must integrate advanced predictive analytics and machine learning algorithms that can analyze vast amounts of data to identify early warning signs of potential threats.

Moreover, the diversity of AI applications adds another layer of complexity to the development of a proactive alert system. AI is being integrated into a wide range of sectors, from healthcare and finance to transportation and defense. Each of these sectors presents unique challenges and vulnerabilities that must be addressed. A one-size-fits-all approach is unlikely to be effective; instead, the system must be adaptable and customizable to cater to the specific needs and risks associated with different industries. This requires a deep understanding of the domain-specific applications of AI and the potential threats they pose.

In addition to technical challenges, there are significant ethical and legal considerations that must be taken into account. The implementation of a proactive alert system involves the collection and analysis of vast amounts of data, raising concerns about privacy and data security. Developers must ensure that the system complies with existing data protection regulations and ethical standards, balancing the need for security with the rights of individuals. Furthermore, the system must be transparent and accountable, providing clear explanations for its alerts and recommendations to build trust among users.

Collaboration and information sharing are also critical components in overcoming the challenges associated with developing a proactive alert system. Given the global nature of AI threats, it is essential for governments, industry leaders, and researchers to work together to share insights and best practices. This collaborative approach can help to create a more comprehensive understanding of the threat landscape and facilitate the development of more effective solutions. However, achieving this level of cooperation can be difficult due to competitive interests and concerns about intellectual property.

Finally, the continuous evolution of AI technologies means that a proactive alert system must be dynamic and capable of adapting to new threats as they arise. This requires ongoing research and development, as well as regular updates to the system to incorporate the latest advancements in AI and cybersecurity. It also necessitates a commitment to continuous learning and improvement, ensuring that the system remains effective in the face of an ever-changing threat landscape.

In conclusion, while the development of a proactive alert system for emerging AI threats is undoubtedly challenging, it is a crucial endeavor that demands a multifaceted approach. By addressing the technical, ethical, and collaborative challenges, developers can create a system that not only anticipates and mitigates threats but also fosters trust and cooperation among stakeholders. As AI continues to shape the future, such a system will be indispensable in safeguarding against the potential risks it presents.

Future Trends in Proactive AI Threat Management

In the rapidly evolving landscape of artificial intelligence, the emergence of new threats necessitates a proactive approach to threat management. As AI systems become increasingly integrated into critical sectors such as healthcare, finance, and national security, the potential for misuse or unintended consequences grows. Consequently, the development of a proactive alert system for emerging AI threats is not only prudent but essential. This system aims to identify, assess, and mitigate risks before they manifest into significant challenges.

To begin with, the foundation of a proactive alert system lies in its ability to continuously monitor AI activities and developments. By leveraging advanced machine learning algorithms, the system can analyze vast amounts of data in real time, identifying patterns and anomalies that may indicate potential threats. This continuous monitoring is crucial, as it allows for the early detection of risks, enabling stakeholders to respond swiftly and effectively. Moreover, the integration of natural language processing capabilities enhances the system’s ability to interpret unstructured text, further improving its threat detection capabilities.

In addition to monitoring, a proactive alert system must incorporate predictive analytics to anticipate future threats. By analyzing historical data and current trends, the system can forecast potential risks and vulnerabilities. This predictive capability is invaluable, as it allows organizations to implement preventive measures, thereby reducing the likelihood of threats materializing. Furthermore, the system can simulate various scenarios, providing insights into how different threats might evolve and impact AI systems. This foresight enables decision-makers to develop robust strategies to counteract potential risks.
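As a small worked example of forecasting from historical data, the sketch below fits a straight-line trend to evenly spaced observations and estimates how many periods remain before the trend reaches a chosen limit. The function names, the weekly incident counts, and the limit of 10 are assumptions, and a linear trend is only one of many possible forecasting models.

```python
def fit_trend(values):
    """Least-squares slope and intercept for values observed at t = 0, 1, 2, ...

    Requires at least two observations.
    """
    n = len(values)
    t_mean = (n - 1) / 2
    v_mean = sum(values) / n
    covariance = sum((t - t_mean) * (v - v_mean) for t, v in enumerate(values))
    t_variance = sum((t - t_mean) ** 2 for t in range(n))
    slope = covariance / t_variance
    return slope, v_mean - slope * t_mean


def periods_until(values, limit):
    """Periods after the latest observation until the trend reaches `limit`.

    Returns None when the trend is flat or falling.
    """
    slope, intercept = fit_trend(values)
    if slope <= 0:
        return None
    latest_t = len(values) - 1
    crossing_t = (limit - intercept) / slope
    return max(0.0, crossing_t - latest_t)


# Hypothetical weekly counts of flagged incidents.
weekly_incidents = [2, 3, 4, 5, 6]
print(periods_until(weekly_incidents, limit=10))  # 4.0
```

An estimate like this gives decision-makers lead time to act, but it extrapolates past behavior and will miss abrupt changes, which is why it complements continuous monitoring rather than replacing it.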

Another critical component of a proactive alert system is its ability to facilitate collaboration among stakeholders. Given the global nature of AI development and deployment, threats often transcend national and organizational boundaries. Therefore, fostering collaboration between governments, private sector entities, and academia is essential. The system can serve as a centralized platform for sharing information and best practices, promoting a collective approach to threat management. By encouraging open communication and cooperation, stakeholders can pool their resources and expertise, enhancing their ability to address emerging threats effectively.

Moreover, the system must be adaptable to the ever-changing AI landscape. As new technologies and methodologies emerge, the system should evolve to incorporate these advancements. This adaptability ensures that the system remains relevant and effective in identifying and mitigating threats. Regular updates and enhancements to the system’s algorithms and processes are necessary to maintain its efficacy. Additionally, incorporating feedback from users and stakeholders can provide valuable insights into areas for improvement, further refining the system’s capabilities.

Finally, the implementation of a proactive alert system for emerging AI threats requires a commitment to ethical considerations. As AI systems become more autonomous, the potential for ethical dilemmas increases. The system must be designed to uphold ethical standards, ensuring that it respects privacy and human rights while effectively managing threats. Establishing clear guidelines and protocols for the system’s operation can help balance the need for security with ethical considerations.

In conclusion, the development of a proactive alert system for emerging AI threats is a critical step in safeguarding the future of AI technologies. By combining continuous monitoring, predictive analytics, collaboration, adaptability, and ethical considerations, such a system can effectively identify and mitigate risks. As AI continues to advance, the importance of proactive threat management will only grow, underscoring the need for innovative solutions to protect against potential threats.

Q&A

1. **What is a Proactive Alert System for Emerging AI Threats?**

A Proactive Alert System for Emerging AI Threats is a monitoring and notification framework designed to identify, assess, and alert stakeholders about potential risks and threats posed by AI technologies before they manifest into significant issues.

2. **How does the system identify emerging AI threats?**

The system uses advanced algorithms, machine learning models, and data analytics to continuously monitor AI activities, detect anomalies, and analyze patterns that may indicate potential threats or vulnerabilities.

3. **What types of threats can the system detect?**

The system can detect a variety of threats, including data breaches, algorithmic biases, adversarial attacks, unauthorized access, and misuse of AI technologies.

4. **Who benefits from a Proactive Alert System for Emerging AI Threats?**

Organizations deploying AI technologies, cybersecurity teams, regulatory bodies, and stakeholders concerned with AI ethics and safety benefit from such a system by gaining early warnings and insights into potential risks.

5. **What are the key components of the system?**

Key components include threat detection algorithms, real-time monitoring tools, data analysis platforms, alert notification mechanisms, and a user interface for managing and responding to alerts.

6. **How does the system improve over time?**

The system improves through continuous learning from new data, feedback loops, and updates to its algorithms, allowing it to adapt to evolving threats and enhance its accuracy and effectiveness in threat detection.

A Proactive Alert System for Emerging AI Threats is essential in today’s rapidly evolving technological landscape, where AI systems are increasingly integrated into critical sectors. Such a system would serve as an early warning mechanism, identifying potential threats and vulnerabilities in AI technologies before they can be exploited. By leveraging advanced monitoring tools, real-time data analysis, and machine learning algorithms, the system can detect anomalies and patterns indicative of emerging threats. This proactive approach not only enhances security measures but also allows organizations to implement timely countermeasures, minimizing potential damage. Furthermore, it fosters a culture of continuous vigilance and adaptation, ensuring that AI advancements are aligned with safety and ethical standards. Ultimately, a Proactive Alert System is a crucial component in safeguarding against the misuse of AI, protecting both technological infrastructure and societal well-being.