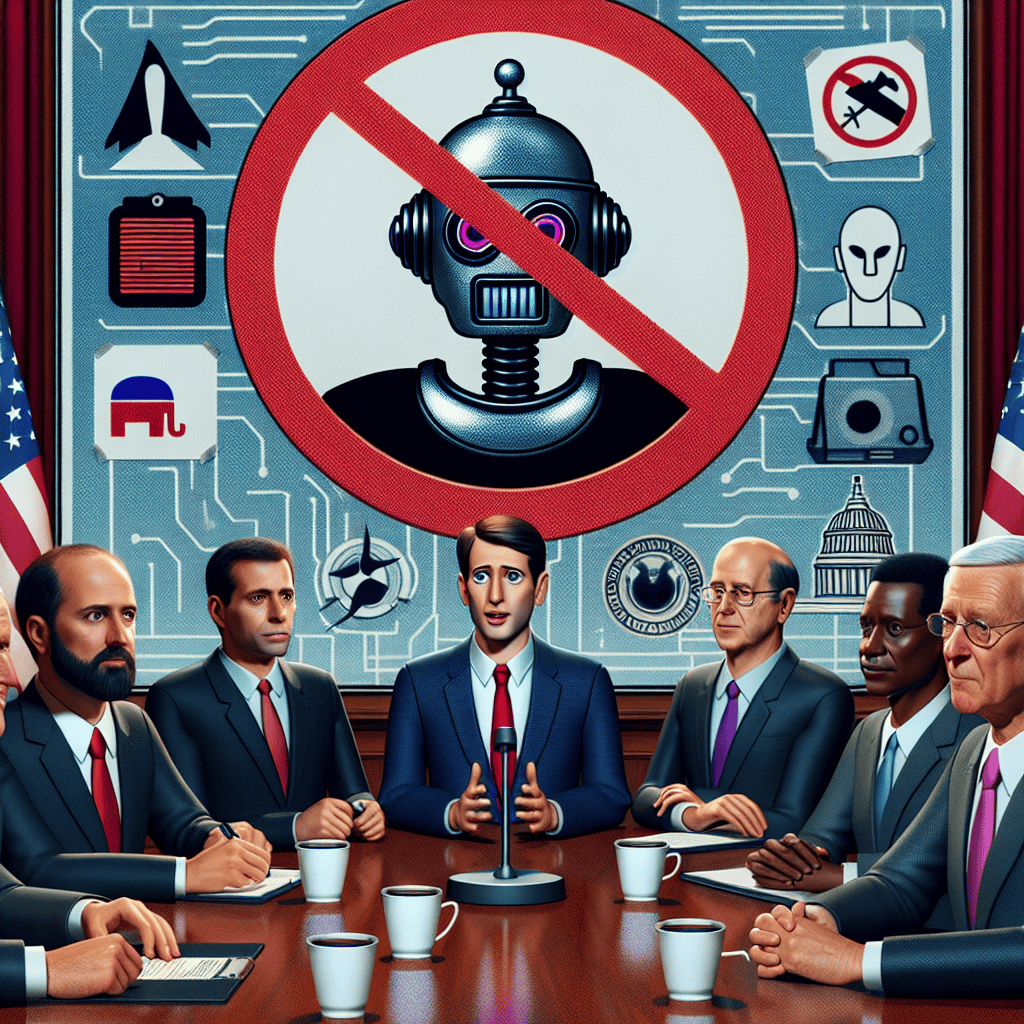

Lawmakers are urging a ban on the DeepSeek AI app on U.S. government devices, citing concerns over data security. DeepSeek is developed by a Chinese company, and its privacy policy states that the information it collects is stored on servers in the People’s Republic of China. Legislators fear that using the app on official devices could expose sensitive government information and undermine national security. As artificial intelligence tools continue to evolve, policymakers are scrutinizing their implications for privacy and safety more closely, prompting a reevaluation of which tools are permitted on official devices. The move reflects a growing awareness of the need to protect government operations from emerging technological threats.

Lawmakers’ Concerns Over DeepSeek AI App Security

In recent weeks, lawmakers have raised significant concerns about the security implications of the DeepSeek AI app, particularly its use on U.S. government devices. As artificial intelligence becomes integrated into more sectors, the risks of deploying it in sensitive environments have come under closer scrutiny. DeepSeek, a general-purpose AI chatbot, has drawn attention not only for its capabilities but also for the vulnerabilities it may introduce into government operations.

One of the primary concerns raised by lawmakers is the app’s data handling. Government devices often hold sensitive, and sometimes classified, information, so any avenue for unauthorized access or data leakage is a pressing issue. Lawmakers have pointed out that the app may not meet the stringent security requirements for government use. The concern is compounded by how cloud-based AI chatbots work: whatever a user types or uploads is sent to the provider’s servers, where it may be stored and processed, so sensitive information can leave government control even without a conventional breach. As a result, the potential for data leaks or misuse has prompted calls for a thorough review of the app’s security measures.

Moreover, the lawmakers have highlighted the implications of using third-party applications like DeepSeek AI, which may not be subject to the same rigorous security assessments as government-developed software. The reliance on external technology raises questions about the vetting processes in place to ensure that these applications do not compromise national security. In an era where cyber threats are increasingly sophisticated, the integration of unverified software into government systems could create vulnerabilities that adversaries might exploit. Consequently, lawmakers are advocating for a comprehensive evaluation of the app’s security framework before any further deployment on government devices.

In addition to concerns about data security, lawmakers have also raised issues regarding the ethical implications of using AI technologies in government operations. The potential for bias in AI algorithms, which can lead to skewed data interpretations and decision-making processes, has become a focal point of discussion. Lawmakers argue that the deployment of AI applications must be accompanied by transparency and accountability measures to ensure that they do not perpetuate existing biases or create new forms of discrimination. This perspective underscores the need for a balanced approach to AI integration, one that prioritizes both innovation and ethical considerations.

Furthermore, the rapid pace of technological advancement poses challenges for regulatory frameworks that govern the use of AI in government settings. Lawmakers have called for updated policies that address the unique risks associated with AI applications, emphasizing the importance of establishing clear guidelines for their deployment. By creating a robust regulatory environment, lawmakers aim to safeguard sensitive information while fostering an atmosphere conducive to technological innovation.

In light of these concerns, the call for a ban on the DeepSeek AI app for U.S. government devices reflects a broader apprehension about the intersection of technology and national security. As lawmakers continue to navigate the complexities of integrating AI into government operations, their focus remains on ensuring that security, ethics, and accountability are prioritized. Ultimately, the ongoing dialogue surrounding the DeepSeek AI app serves as a critical reminder of the need for vigilance in the face of rapidly evolving technologies, particularly when they intersect with the responsibilities of government.

Implications of Banning DeepSeek AI for Government Efficiency

The recent call by lawmakers to ban the DeepSeek AI app from government devices has sparked a significant debate about what such a decision would mean for government efficiency. As artificial intelligence continues to evolve and spread across sectors, its potential to enhance productivity and streamline operations cannot be overlooked. However, concerns about data security and privacy have prompted legislators to reconsider the use of such technologies within government.

One potential consequence of a ban is slower information processing for employees who would otherwise use the app. As a general-purpose AI assistant, DeepSeek can quickly answer questions, summarize documents, and draft text, which could speed up some routine work inside agencies. If no approved alternative is available, employees may fall back on slower, more traditional methods of handling information. This could delay some operations and reduce the responsiveness of government services.

The ban could also hinder innovation within government agencies, particularly if it discourages the adoption of AI more broadly. Integrating AI tools is not merely about efficiency; it also represents a shift toward a more modern approach to governance. By embracing AI, government entities can explore new ways to improve public services, enhance citizen engagement, and optimize resource allocation. An overly broad prohibition could stall this progress and leave agencies reliant on older systems that struggle to meet changing demands.

Beyond the operational impacts, the decision to ban DeepSeek raises broader questions about the government’s relationship with technology. As the public sector seeks to adopt innovative solutions, banning a specific application may create an atmosphere of caution and reluctance to explore new technologies. Agencies could then miss opportunities to harness the benefits of AI, leaving them at a disadvantage relative to the private sector, which is often quicker to adopt new technologies.

The ban could also raise costs for agencies that need alternative solutions. They may have to develop or procure software that meets their needs while adhering to security protocols, a transition that could be time-consuming and expensive and could divert resources from other areas of public service. By contrast, retaining access to existing tools like DeepSeek AI would avoid these transition costs and allow a more seamless integration of technology into government operations.

While concerns about data security and privacy are valid and must be addressed, lawmakers should also weigh the potential consequences of a ban on DeepSeek AI. Striking a balance between safeguarding sensitive information and using new technology is crucial for government efficiency. Instead of an outright ban, a more nuanced approach that includes stringent regulations and oversight may offer a path to the responsible use of AI. Such an approach could keep government agencies equipped to meet modern challenges while protecting the integrity of public data. Ultimately, the decision on DeepSeek AI will have lasting implications for government operations and for the government’s ability to serve the public effectively.

The Role of AI in Government: A Double-Edged Sword

The integration of artificial intelligence (AI) into government operations has sparked a significant debate regarding its benefits and potential risks. As lawmakers call for a ban on the DeepSeek AI app for U.S. government devices, it becomes increasingly important to examine the dual nature of AI in public administration. On one hand, AI technologies promise enhanced efficiency, improved decision-making, and the ability to process vast amounts of data quickly. These advantages can lead to more informed policies and streamlined services for citizens. For instance, AI can analyze trends in public health data, optimize resource allocation, and even predict potential crises before they escalate, thereby enabling proactive governance.

However, the very capabilities that make AI appealing also raise serious concerns about security, privacy, and ethics. The call to ban the DeepSeek AI app underscores these issues, as lawmakers worry about the potential misuse of sensitive government data. The risk of data breaches and unauthorized access increases when AI applications handle confidential information, particularly cloud-based services that send user inputs to external servers for processing and storage. The concern is compounded by the fact that many AI systems operate as “black boxes,” making it difficult to understand how they produce their outputs or to hold anyone accountable for them.

Moreover, the reliance on AI can inadvertently lead to biases in decision-making processes. If the data fed into AI systems is flawed or unrepresentative, the outcomes can perpetuate existing inequalities or create new forms of discrimination. This is particularly troubling in government contexts, where decisions can have far-reaching consequences for individuals and communities. As such, the ethical implications of deploying AI in public service must be carefully considered, ensuring that systems are designed to promote fairness and transparency.

In addition to ethical concerns, there is also the issue of dependency on technology. As governments increasingly turn to AI for various functions, there is a risk that human oversight may diminish. This reliance can lead to a lack of critical thinking and a diminished capacity for human judgment in decision-making processes. While AI can provide valuable insights, it should not replace the nuanced understanding that human officials bring to complex issues. Therefore, it is essential to strike a balance between leveraging AI’s capabilities and maintaining human oversight to ensure that decisions are made with a comprehensive understanding of the context.

Furthermore, the rapid pace of AI development poses challenges for regulatory frameworks. Lawmakers often find themselves playing catch-up, trying to establish guidelines that can keep pace with technological advancements. The call for a ban on specific applications like DeepSeek reflects a growing recognition that not all AI tools are suitable for government use. As such, it is crucial for policymakers to engage in ongoing dialogue with technology experts, ethicists, and the public to develop robust regulations that address the unique challenges posed by AI.

In conclusion, while AI holds the potential to transform government operations for the better, its integration should be approached with caution. The double-edged nature of AI requires a thorough examination of its implications for security, ethics, and human oversight. As lawmakers navigate these complexities, the call to ban certain AI applications is a reminder of the need for careful consideration and responsible governance. Ultimately, the goal should be to harness the benefits of AI while safeguarding the values and principles that underpin democratic governance.

Public Response to the DeepSeek AI Ban Proposal

The proposal to ban the DeepSeek AI application from U.S. government devices has sparked a significant public response, reflecting a complex interplay of concerns regarding privacy, security, and technological advancement. As lawmakers voice their apprehensions about the potential risks associated with the use of this AI tool, citizens and experts alike are weighing in on the implications of such a ban. The discourse surrounding this issue is multifaceted, revealing a spectrum of opinions that highlight the challenges of integrating advanced technology into government operations.

Many individuals support the ban, citing the need to keep sensitive information from being exposed. Because the app sends what users enter to servers controlled by its developer, critics warn that sensitive or even classified material could end up outside government control. Proponents of the ban argue that the risks of using such technology on government devices outweigh the benefits. They emphasize that, as cyber threats grow more sophisticated, national security and the integrity of government operations must come first.

Conversely, others in the public sphere advocate a more nuanced approach. Some argue that an outright ban may forgo the efficiency gains that AI can bring to government. They contend that, rather than a blanket prohibition, a more effective strategy would combine stringent regulations with oversight mechanisms to ensure that AI tools like DeepSeek are used safely and responsibly. With proper guidelines, they suggest, the government could use AI to improve decision-making and public services without compromising security.

Moreover, the debate has also drawn attention to the broader implications of technology in governance. As citizens become increasingly aware of the role that AI plays in various sectors, there is a growing demand for transparency and accountability in how these technologies are deployed. Many members of the public are calling for clearer communication from lawmakers regarding the rationale behind the proposed ban and the criteria used to evaluate the risks associated with AI applications. This desire for transparency reflects a broader trend in society, where individuals seek to understand the impact of technology on their lives and the mechanisms that govern its use.

In addition to concerns about security and innovation, the discussion surrounding the DeepSeek AI ban also touches on ethical considerations. The potential for bias in AI algorithms and the implications of data privacy are critical issues that resonate with many citizens. As the public becomes more informed about the ethical dimensions of AI, there is an increasing expectation that lawmakers will address these concerns in their deliberations. This has led to calls for comprehensive assessments of AI technologies, ensuring that they align with democratic values and respect individual rights.

As the conversation continues to evolve, it is clear that the public response to the DeepSeek AI ban proposal is not merely a reaction to a specific application but rather a reflection of broader societal concerns about technology’s role in governance. The interplay of security, innovation, transparency, and ethics underscores the complexity of the issue, highlighting the need for a balanced approach that considers the diverse perspectives of stakeholders. Ultimately, the outcome of this debate will likely shape the future of AI integration within government, influencing how technology is utilized to serve the public good while safeguarding essential values.

Alternatives to DeepSeek AI for Government Use

As concerns about the DeepSeek AI app continue to mount, particularly over its potential security vulnerabilities, lawmakers are increasingly advocating a ban on its use on U.S. government devices. This situation has prompted a critical examination of alternative AI solutions that can fulfill similar functions while protecting sensitive government data. When evaluating these alternatives, it is essential to consider both their capabilities and their compliance with stringent security protocols.

One promising alternative is proprietary AI platforms offered in government-specific configurations. These offerings are designed to align with federal security requirements, such as FedRAMP authorization for cloud services, which reduces the risk of data breaches. For instance, Microsoft Azure Government provides a cloud environment dedicated to U.S. government agencies and their partners, and IBM markets its Watson AI tools to public-sector clients. By using platforms that meet federal security standards, agencies can gain advanced analytical capabilities while maintaining control over their data and complying with federal guidelines.

In addition to proprietary solutions, open-source machine learning frameworks such as TensorFlow and PyTorch offer another option, letting agencies build and run their own models on infrastructure they control. Because the source code is public, agencies can audit it and modify the software to meet their specific security needs, which can significantly enhance trust in the resulting systems. The collaborative nature of open-source development can also help security, since a large and diverse group of contributors may identify and fix vulnerabilities quickly.

AI can also be integrated with existing cybersecurity measures to strengthen defenses against potential threats. For example, AI-driven cybersecurity tools can analyze patterns and detect anomalies in real time, adding a layer of protection for sensitive government data. Products from Darktrace and CrowdStrike use machine learning to identify and respond to cyber threats, complementing traditional security measures. By adopting such integrated approaches, agencies can strengthen their overall security posture while still benefiting from the efficiencies AI provides.

Another alternative worth considering is the development of in-house AI capabilities. By investing in the training and development of government personnel, agencies can cultivate a workforce adept at creating and managing AI solutions tailored to their specific needs. This approach not only fosters innovation but also ensures that the resulting systems are built with security as a foundational principle. In-house development can also reduce reliance on external vendors, lowering the risk of data exposure associated with third-party applications.

As the debate surrounding the use of DeepSeek AI continues, it is crucial for lawmakers and government officials to prioritize the exploration of these alternatives. By focusing on solutions that emphasize security, transparency, and adaptability, government agencies can effectively leverage AI technology while safeguarding sensitive information. Ultimately, the goal should be to create a secure and efficient environment that harnesses the benefits of artificial intelligence without compromising the integrity of government operations. In this rapidly evolving technological landscape, the pursuit of secure alternatives to DeepSeek AI is not just prudent; it is essential for the protection of national interests and the trust of the public.

Future of AI Regulation in Government Technology

As artificial intelligence continues to evolve, the call to regulate its use in government technology has gained significant momentum. Lawmakers have recently raised concerns about the DeepSeek AI app and urged a ban on its use on U.S. government devices. This development highlights a broader conversation about the future of AI regulation, particularly in the context of national security and data privacy.

The DeepSeek AI app, a general-purpose chatbot, has been met with skepticism because of its potential implications for sensitive government information. Lawmakers argue that using such technology in government operations could expose critical data, particularly if the app’s algorithms are not transparent or its data handling practices are not adequately scrutinized. This situation underscores the need for a comprehensive regulatory framework governing the deployment of AI technologies in the public sector.

In light of these concerns, it is essential to consider the implications of unregulated AI use in government. The rapid advancement of AI capabilities has outpaced existing regulatory measures, leaving a gap that could be exploited by malicious actors. As government agencies increasingly rely on AI for tasks ranging from cybersecurity to public service delivery, the potential risks associated with unregulated technology become more pronounced. Therefore, lawmakers are advocating for a proactive approach to AI regulation, emphasizing the need for standards that ensure the security and integrity of government operations.

Moreover, the conversation surrounding AI regulation is not solely about preventing risks; it also encompasses the promotion of ethical practices in technology deployment. As AI systems become more integrated into decision-making processes, the potential for bias and discrimination must be addressed. Lawmakers are calling for regulations that mandate transparency in AI algorithms, ensuring that these systems are fair and accountable. This focus on ethical considerations is crucial, as it aligns with the broader societal expectations for responsible technology use.

Beyond security and ethics, effective regulation will also depend on collaboration between government entities and technology developers. Policymakers will need to work closely with AI experts to establish guidelines that foster innovation while safeguarding public interests. Such collaboration can produce best practices that both strengthen the security of government technology and encourage the responsible advancement of AI capabilities.

As discussions around the future of AI regulation in government technology continue, it is clear that a multifaceted strategy is necessary. This strategy should encompass not only the establishment of regulatory frameworks but also ongoing education and training for government employees on the ethical use of AI. By equipping personnel with the knowledge and skills to navigate the complexities of AI technologies, government agencies can better mitigate risks and harness the benefits of these innovations.

In conclusion, the call for a ban on the DeepSeek AI app serves as a catalyst for a broader dialogue about the future of AI regulation in government technology. As lawmakers push for measures that prioritize security, ethics, and collaboration, it is imperative that a balanced approach is adopted. This approach will not only protect sensitive information but also ensure that the deployment of AI in government serves the public good, fostering trust and accountability in an increasingly digital world.

Q&A

1. **What is the DeepSeek AI app?**

– DeepSeek AI is a general-purpose AI chatbot app developed by the Chinese company DeepSeek. It can answer questions, generate and summarize text, and assist with analysis.

2. **Why are lawmakers calling for a ban on the DeepSeek AI app?**

– Lawmakers are concerned about potential security risks and data privacy issues associated with using the app on government devices, including the company’s statement that the data it collects is stored on servers in China.

3. **What specific risks are associated with using DeepSeek AI on government devices?**

– Risks include unauthorized data access, potential data leaks, and vulnerabilities to cyberattacks.

4. **Have any government agencies already banned the use of DeepSeek AI?**

– Yes, some government agencies have implemented restrictions or outright bans on the use of the app on their devices.

5. **What are the lawmakers proposing as a solution?**

– Lawmakers are proposing a comprehensive review of AI applications used on government devices and stricter regulations to ensure data security.

6. **What impact could a ban on DeepSeek AI have on government operations?**

– A ban could limit the tools available for data analysis and decision-making, potentially affecting efficiency and innovation within government operations.

Lawmakers are advocating for a ban on the DeepSeek AI app for U.S. government devices due to concerns over data security, privacy risks, and potential misuse of sensitive information. The call for action highlights the need for stringent regulations on AI technologies used within government systems to protect national security and ensure the integrity of governmental operations.